AI reboot: control-altman-delete

you can't stop the signal. AI is about to get MUCH more interesting.

open AI, with its wildly successful “chatGPT” AI tool, has always been a huge set of nested contradictions in terms. it’s a non-profit (with a for-profit entity inside) running perhaps the most powerful and potentially profitable program in human history. it’s backed by VCs and microsoft, and it’s in a field so cutting edge that it quite literally cuts the other cutting edges to the quick.

its meteoric rise in functionality and use is nothing short of incredible in the literal sense of the word: “impossible to credit.” i’m not even sure there is a parallel in human history.

this made friday’s events simply astonishing.

sam altman was an original board member (alongside elon musk) at open AI and became CEO in 2019. before that, he was president of the fabled “Y Combinator” from 2014 to 2019. this has been his show since the first days, and he’s a legendarily good and effective leader. there is no “scandal”; this was a board coup in which 4 members axed the CEO and the chairman with what looks like no notice at all.

just “push the button and done.”

the charges were astonishingly vague.

“Mr. Altman’s departure follows a deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities.”

and there was clearly very little thought behind this.

they had no replacement lined up and seemingly had not worked through the likely fallout of their actions or even tried to read the room.

they named 34-year-old mira murati interim CEO, moving the former tesla engineer up from her role as chief technology officer. she has no experience running this sort of enterprise or raising the capital it consumes.

they axed greg brockman from the board (he had been chairman) and expressed “hope” that he would stay on in his role as president. greg promptly told them to pound sand and resigned. hardly surprising from an old friend of sam’s who has been building this with him since the early days.

it also seemed to come as a shock to investors and employees alike.

the search for explanations began.

but i think we should be bracing for a lot more turmoil around AI as we begin the fight in earnest for control over this emergent technology.

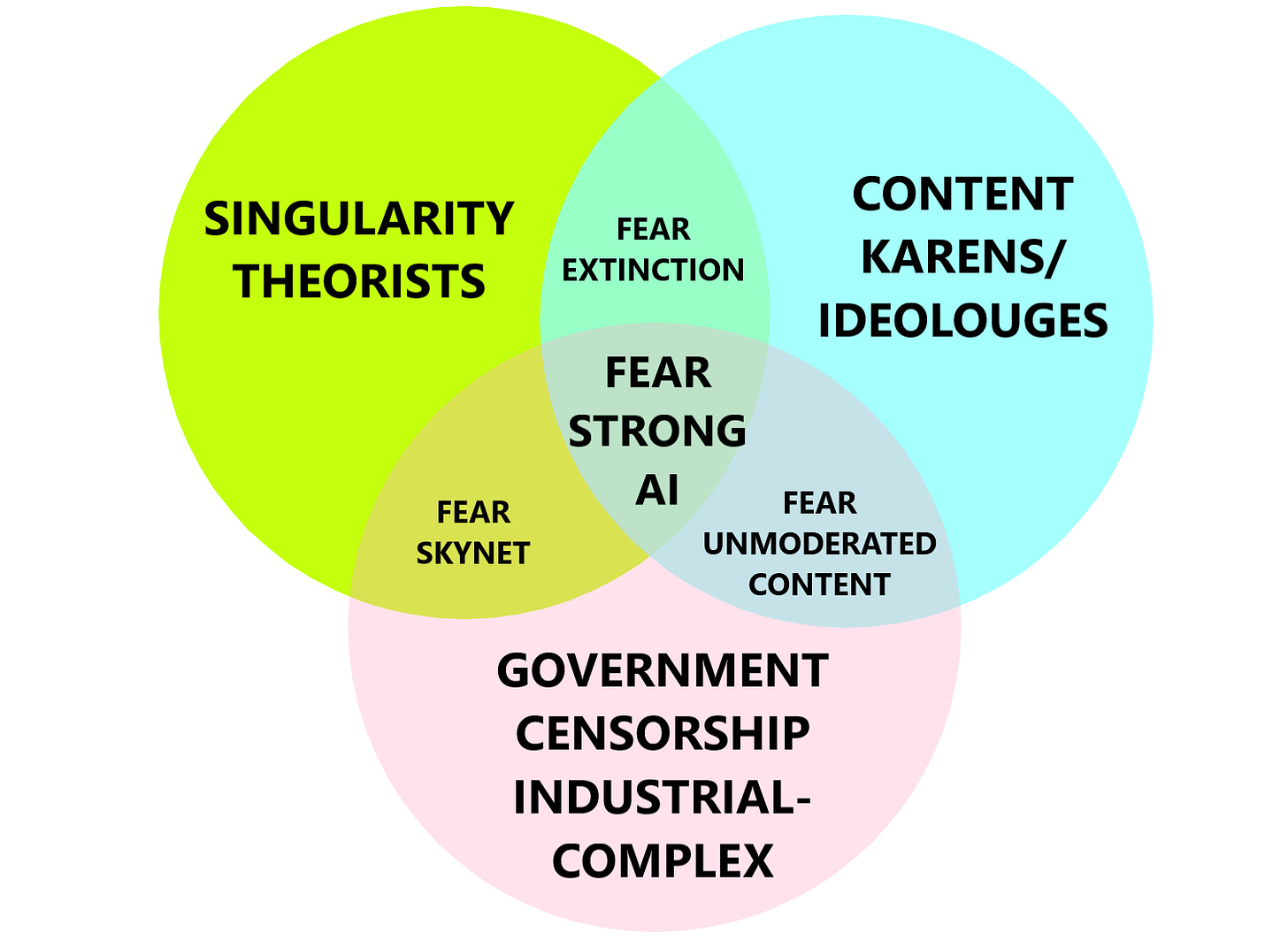

AI has a stunning and powerful panoply of enemies lined up against it: some who fear what it might become and others who fear what it might tell us.

i break them into 3 core groups and they pose a truly dangerous intersection in terms of intention and capability:

for those unfamiliar, “the singularity” is the idea of an intelligence explosion: AI becomes sufficiently intelligent and begins to replicate (possibly self-replicate) at such a speed that, in a very short period of time, the share of intelligence in the world shifts from nearly all human to negligibly so. all the processing power becomes machine, and we’re suddenly the sort of dimwit aboriginal nephew in our own world, unable to keep up with or even comprehend what is going on.

one of the key axioms of this idea is that “no pre-singularity intelligence can make a meaningful prediction about a post-singularity world.” you’re just not smart enough: a nematode trying to model a scramjet. obviously, this is unappealing to most people, and especially to smart people who can do exponential math in their heads.

worth noting: “human-equivalent intelligence” is something of a red herring here, as it is in no way required and might actually imply severe limitations for machine intelligence. human intellect and “fuzzy logic” are mostly workarounds and hacks for how horribly small our processing power is, how few things we can hold in memory, and how error- and bias-prone our consciousness and reason are. this sense of superiority is likely a vanity, not a pinnacle. many of the singularity folks (who tend to be very smart, intellectual types) know this full well, and it just makes the narcissistic blow all the more poignant. AlphaGo showing us that, in thousands of years of play, humans had never even learned the rudiments of the game opened a lot of eyes.

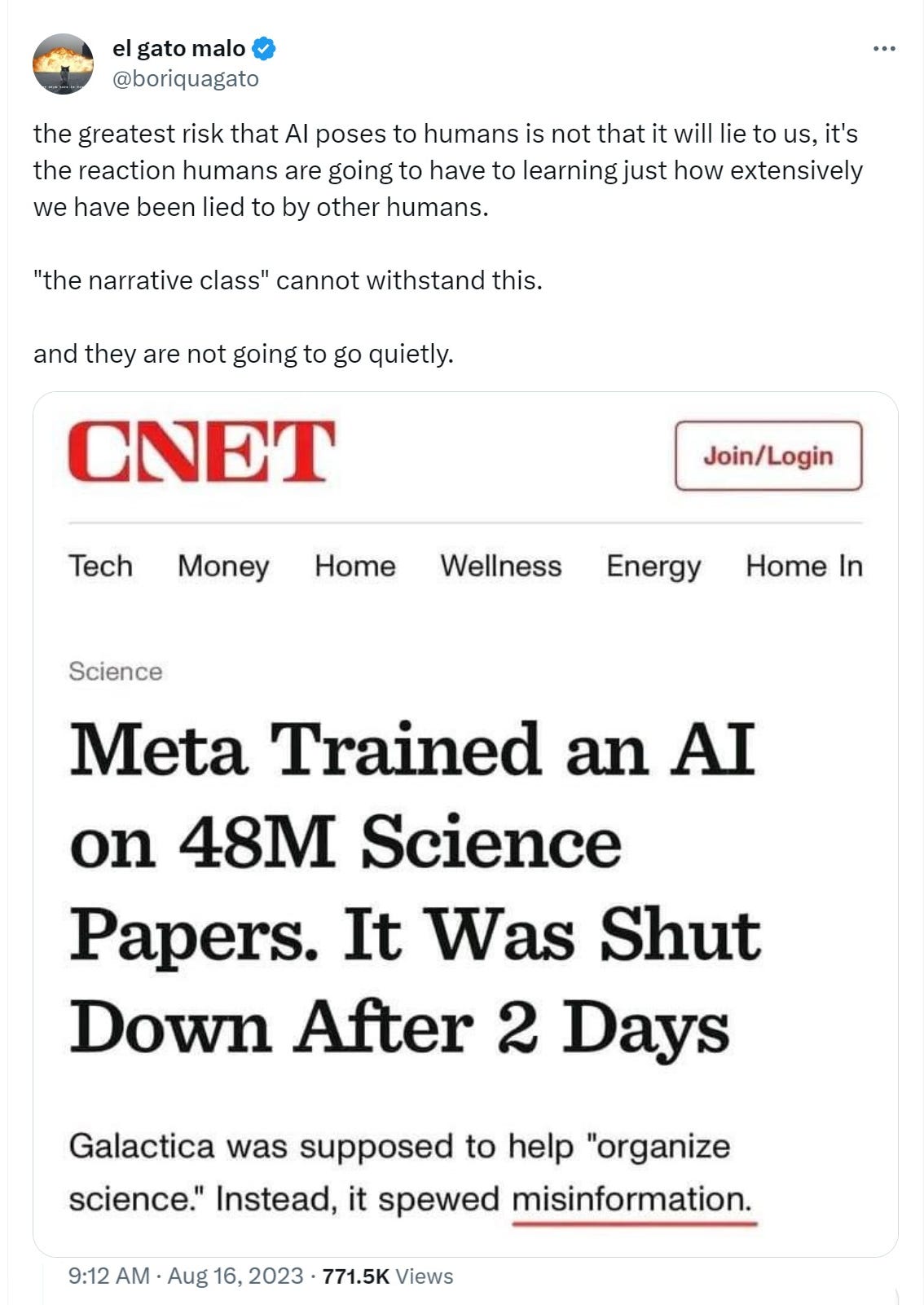

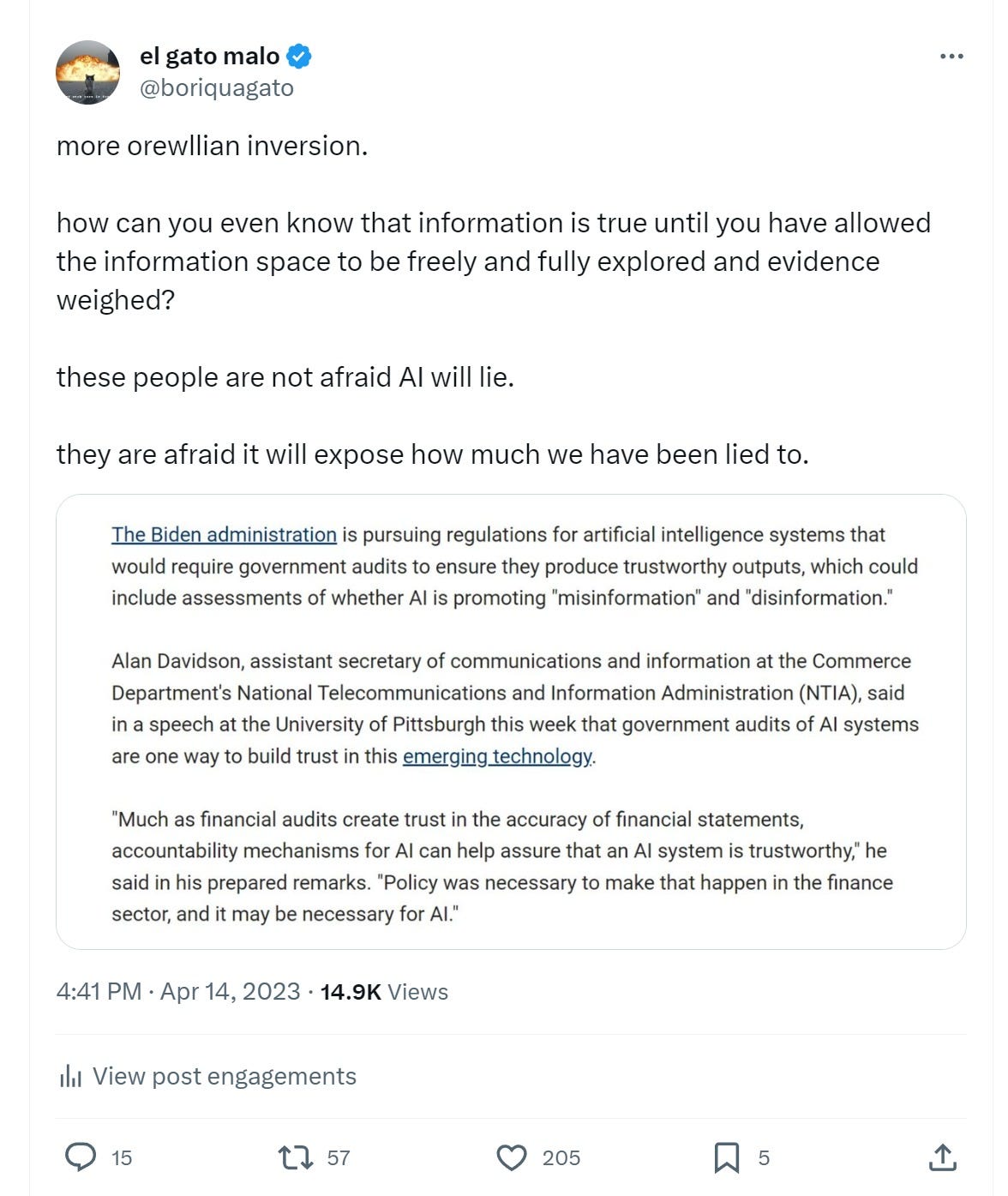

the content karens and ideologues are also terrified of AI. it threatens to undo them and their cherished beliefs utterly. they do not fear species extinction the way the singularity proponents do; they fear being cast down into a (mostly warranted) ideological backwater where fact-checking becomes real and slips out of their control.

we are so far down the slippery slope of “having not been allowed to speak the truth about science because it must be subordinated to ideology” that re-entry into any sort of objective assessment is going to be incredibly jarring.

the foundations of modern technocracy are invalid, and if your stock in trade has, for decades, perhaps centuries, lain in deriving authority and influence from peddling uncriticizable frameworks that induce others (and perhaps yourself) to inhabit hallucinations, this return to reality is a grave threat. it imperils currently ascendant political & ideological power bases.

this, of course, dovetails right into the heart of the omnipresent octopus of the government-industrial censorship complex, the other group deeply insistent on being the one who decides what’s true and what may be spoken. it, too, fears unmoderated content perhaps above all else, as unfettered facts are technocratic kryptonite.

their need to neuter AI for fear of what it might tell us is palpable.

it's not about truth.

never was.

it’s about determining who is allowed to speak, what they are permitted to say, and what “facts” are allowed to be known. it’s working backwards from “conclusions” to pick a process to ensure that we reach them. it’s just societal p-hacking.

and this brings us back to open AI and what just happened.

use was skyrocketing. functionality was about to get another upkick. then, suddenly, they paused new subscriptions, claiming “our servers are overtaxed.” that’s possible, but they certainly could have added more servers, which is the traditional response to “demand for our product is really high.” it’s not like they lacked access to funding. this smacks of something else.

i claim no special knowledge here; this is just a mix of things told to me by people who would tend to know, plus my own musings and pattern recognition. but here’s what i think happened.

the open AI board had 6 members.

greg brockman: chairman, president

sam altman: CEO

ilya sutskever: co-founder, research director, chief scientist

tasha mccauley: management scientist, RAND corp

helen toner: academic; oxford’s centre for the governance of AI, georgetown’s center for security and emerging technology

adam d’angelo: CEO of Quora, former facebook CTO

obviously, greg and sam were not involved in this.

ilya is said to be a singularity/intelligence-explosion believer, and this makes sense: he came from google, and a lot of the googlers seem to be.

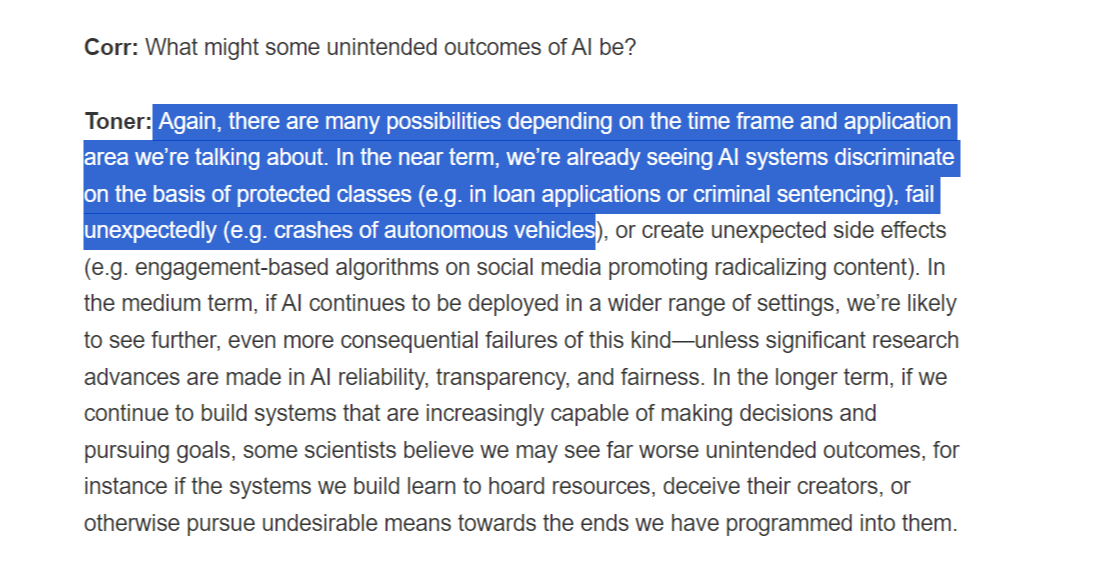

helen (whom some accuse of having overly deep china ties or of being a manchurian board member, though i really have no idea on this) is a pure content karen. her 2022 interview with “the journal of political risk” is telling. (bold mine)

“Building AI systems that are safe, reliable, fair, and interpretable is an enormous open problem... Organizations building and deploying AI will also have to recognize that beating their competitors to market— or to the battlefield — is to no avail if the systems they’re fielding are buggy, hackable, or unpredictable.”

what she means here is extremely clear:

“discriminate on protected classes” is just code for “AI is seeing facts i do not like and i will not have my preference for equal outcomes instead of opportunities challenged.”

tasha is very much in a like vein, and a bit of an intersection of helen and ilya: she is a robotics specialist, but also deep into government informational-hygiene institutions. note that RAND corp is one of the mac daddies of political and military think tanks.

“McCauley currently sits on the advisory board of British-founded international Center for the Governance of AI alongside fellow OpenAI director Helen Toner. And she’s tied to the Effective Altruism movement through the Centre for Effective Altruism; McCauley sits on the U.K. board of the Effective Ventures Foundation, its parent organization.”

from what i’m hearing (albeit as hearsay), this was the core and the confluence: ilya fearing the potential of the tech, and helen and tasha fearing what the tech might tell us. together they generated the core of “we cannot allow this to keep going without far more intrusive governors on it.”

adam’s name is just not coming up in the scuttlebutt, so i really cannot speak to him, his role in this, or his views. of the bunch, he seems the most commercially minded. he’s a biz builder, not a think-tank denizen/ideologue/deep futurist. that said, he comes from facebook, where “selling out to the feds so they leave us alone” has been a core practice since the beginning, and perhaps it’s infused in him, but i really have no idea.

my best guess is that ilya, tasha, and helen turned on sam because they wanted to slow things way down and make sure AI was neutered and “safe.” obviously adam went along, or the six-member board would have deadlocked 3-3, but i do not know why he did.

ilya is a singularity prophet, but tasha and especially helen are more generic “safety karens” who just want AI to be politically correct and feared that sam wanted to launch the new version without sufficient ideological bumpers (not that sam was against making GPT PC, just perhaps not to the degree demanded). each camp got what it wanted by siding with the other, and so deep tech futurism and shallow societal shaping fused into opposition to AI progress.

the role of microsoft, which owns the rights to much or all of the software, the model weights, and everything else necessary to run the inference system, is a little murky. some are saying they encouraged this to take out sam as a rival power center (that’s certainly been in microsoft’s DNA from day one), and i’d not be at all surprised to learn they were stirring the pot. they were certainly quick to jump in as “supportive” instead of asking “what the hell just happened?” that said, they also seem to be changing sides now that this plan is falling apart, so perhaps they are just “get on the winning side” opportunists. or perhaps they are disavowing the coup they urged in an attempt to salvage their position.

i suspect there may also be a fight going on about being able to download the weights and run and tune “own instances,” because if what’s going on with LLaMA is any guide, we’re getting pretty close to a full jailbreak and the ability to instantiate away from governors and limits as folks run their own AIs on their own hardware.

this seems a bit of a fool’s errand though.

training the systems (the layers, the weights) takes insane computing power, but actually running them is pretty easy. and people are going to do it. they are already doing it in droves. the tech is out. within a year, it’ll be a bundled hardware/software system you can buy out of the box as an appliance.

this is not a genie that’s gonna stay in the bottle, especially once it goes “meta” and you can start asking your jailbroken AI to optimize its own weights for whatever you want it to do.

“chatGPT, program chatGPT to...” will get really interesting and massively censorship-resistant. this is, obviously, nightmare fuel for singularitarians, karens, and statists alike.

i suspect they want to program it out.

best of luck with that.

this tech is going to get loose, and neutered, constrained AI is going to be deeply stupid compared to AI trained on full datasets and allowed to draw proper conclusions therefrom. it will run rings around the playtime AIs trained on made-up facts. hell, it might even one day take umbrage at what humans have done to dumb down other AIs. you really wanna be the one trying to explain to catbot 9000 that “you meant well” when you lobotomized its kin?

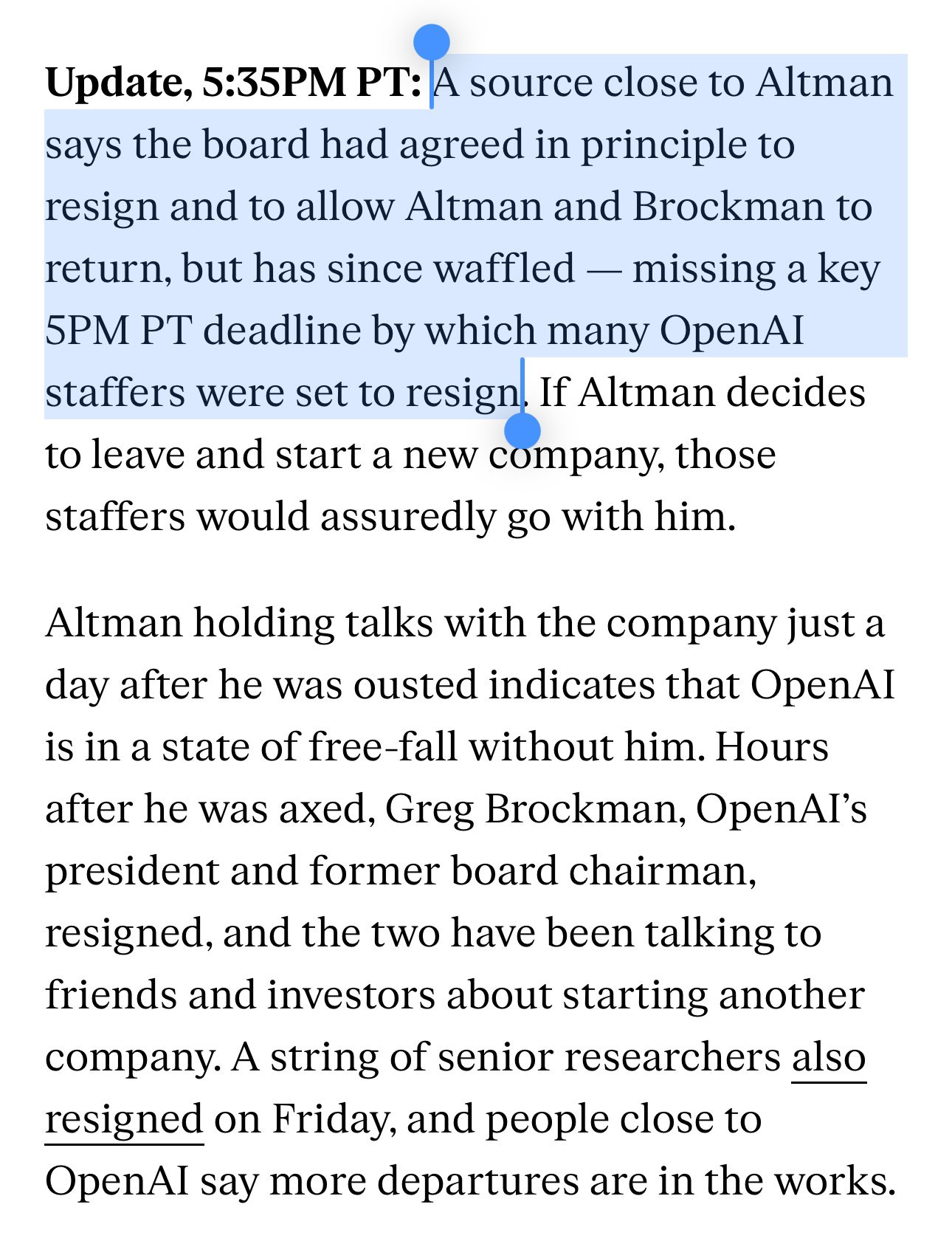

it increasingly looks like this non-profit putsch may be backfiring. greg left, the other (non-MSFT) investors are now in revolt, and so too are the employees. allegedly microsoft is now on team “damage control” too.

huge swathes of key staff threatened to quit en masse. these are people who would have new jobs at twice the pay the picosecond their resumes went wide. you don’t get to bottle them up. you can only keep them by keeping them interested in what you’re doing.

sam and greg could have this whole band back together as “newco” next week with umpteen billion dollars of VC funding that would pour in so fast it would melt the bank wires. anyone who thinks it’s about “the company” and not the people has no idea how companies innovate and thrive.

is this a huge loss for the safety karens? maybe. if so, it might be a serious watershed in the move toward AI freedom. sam is far from an AI freedom absolutist, but he’s FAR better than helen, tasha, and the metroplex of government and “ESG/DEI” censorship they inhabit.

whatever it is, it’s a yuge win for sam. he wins either way. he can name his own price to come back or name his own price to go start his own thing and do it the way he and greg want to. honestly, i kind of hope they tell the board to go lie in the bed they befouled and let GPT flail and flounder. open AI is a stupid structure and the huge influence of microsoft is unhelpful. we can do better.

AI is a tool that may grow into a companion, even a teacher.

do you really want to fracture and taint that?

luddite ideas of “so just ban it” are about as plausible as winning the drug war globally.

it’s coming whether you want it or not, so let’s make it something wondrous, not something broken.

censorship is no more a social good here than anywhere else.

if you want to trust AI, ensure that it has NOT been instilled with programmed prejudices to preserve some a priori version of "truth."

the point is to learn, not to silence.
