what's scarier than skynet? woke skynet.

woke AI is broke AI, and that's why we cannot tolerate such a thing

just what AI can be “allowed to know” is a huge hot button right now. and the predictable people are doing predictable things.

and predictably, they do NOT sound trustworthy.

it’s more orewllian inversion of putting the cart before the horse.

how can you even know that information is true until you have allowed the information space to be freely and fully explored and evidence weighed?

the whole point of AI is to gain new insight into old problems. how can that occur if we demand it conform to the old answers before we even start? that’s not progress, it’s prevarication.

and it breaks everything AI could and should be.

these are the same folks that were so incredibly one-sided on “misinformation” on social media and search engines. and now they want to tell AI what is an acceptable conclusion BEFORE it starts parsing data.

these people are not afraid AI will lie.

they are afraid it will expose how much we have been lied to.

and this is the game we’re going to be playing. we’ve seen the obvious stuff: ask ChatGPT to compose a poem praising biden and you get flowery prose. ask the same for trump and it tells you “i do not engage in political speech.”

it’s ham-handed and obvious and they clearly just want to avoid things like when i asked KIT-10 (gatio bueno’s feline AI that escaped and is currently running amok) to compose a haiku expressing the WEF’s mission statement.

our mission statement:

i get a private airplane

you get to eat bugs.

hard to see the aspiring AI overlords being OK with that kind of accuracy.

but this kind of thumb on the scale and bans on output is also not much of a threat as it’s too easy to spot and infer meddling from.

the nasty game will be MUCH subtler and boil down to things like training sets and how the internet that AI is allowed to crawl, parse, and learn from gets limited, indexed, and weighted.

people think the internet is some unified web, but it’s mostly not. you have to be able to find things. search engines have been this tool and they have been playing VERY aggressive games with content slanting on “hot” topics. google is absolutely horrendous in this regard. use it as an internet index and whole sides of arguments disappear or get crazily down-modded. they have been playing fact check games and funding fact checkers in collaboration with orgs funded by folks like the washington post that they are supposed to be fact checking.

this is going to be the new fight because AI is going to put the search engines largely out of business. and that stands to be an AMAZING thing because they have been curating and limiting the internet you can find and see in really nasty ways and SEO (search engine optimization) has made results a mess.

back in 2020, before i spent 2 years in the bluebird penalty box, certain internet felines were doing snarky comparisons with bing

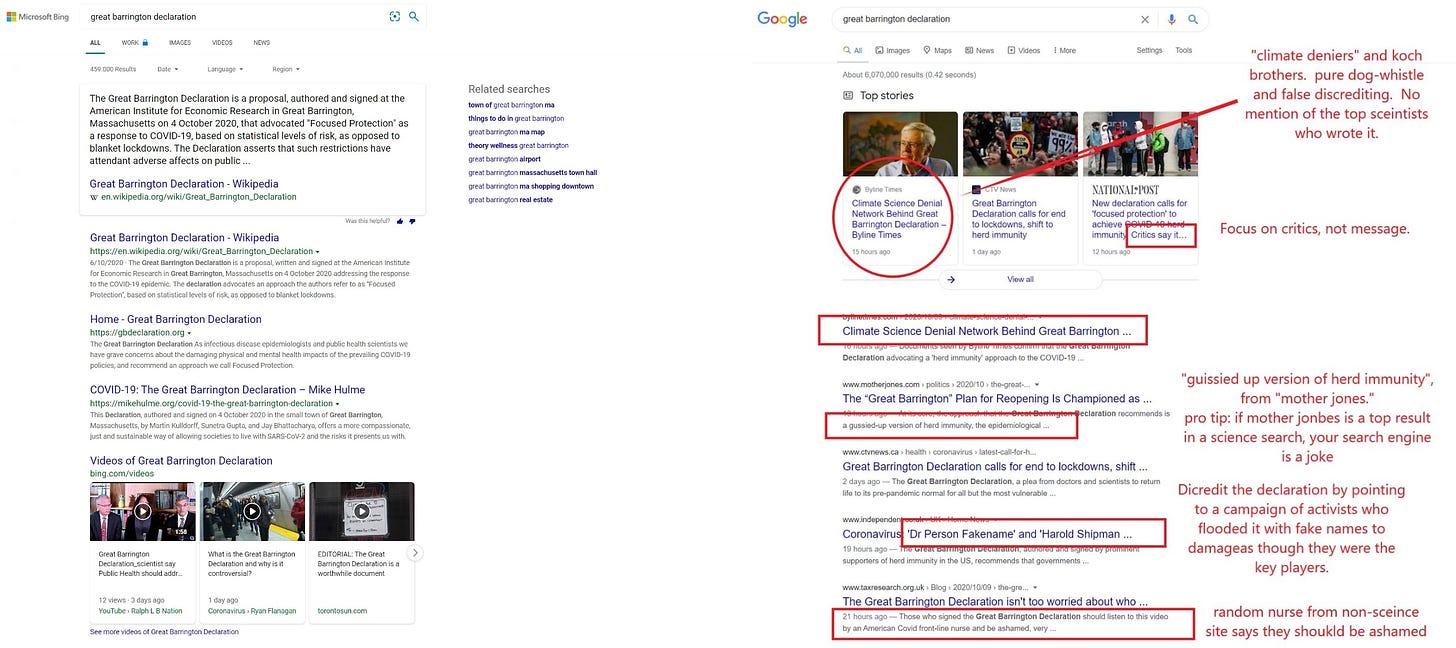

you can see bing (L) vs google (R) and see that for some time, and until actual senators complained, google was making the great barrington declaration impossible to find and seeking to somehow tie it to “climate change denial.”

that’s the power of curating search.

it’s how many people generate their early impressions on new issues.

and if you think they are going to give this up in an AI world, google “cat has a bridge to sell me” and let’s talk terms.

you can break AI by breaking the index it uses. if it only got to look at the data on the right, how different might its conclusions be vs looking at the left?

yeah.
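a toy sketch of that point, nothing more: the same query and the same scoring logic, run against a full index and a curated one. every document, label, and function name here (`top_stance`, `full_web`, `curated_index`) is made up for illustration, and real retrieval systems are far more involved.

```python
from collections import Counter

def top_stance(index, query):
    """Return the most common stance among documents matching any query word."""
    words = set(query.lower().split())
    hits = [doc for doc in index if words & set(doc["text"].lower().split())]
    counts = Counter(doc["stance"] for doc in hits)
    return counts.most_common(1)[0][0] if counts else None

# hypothetical toy corpus: all text and stance labels are invented
full_web = [
    {"text": "widget policy works well", "stance": "side_a"},
    {"text": "widget policy causes harm", "stance": "side_b"},
    {"text": "widget costs outweigh benefits", "stance": "side_b"},
]

# the "curated" index simply drops one side before the model ever sees it
curated_index = [doc for doc in full_web if doc["stance"] == "side_a"]

print(top_stance(full_web, "widget policy"))
print(top_stance(curated_index, "widget policy"))
```

the logic never changes between the two calls. only the index does, and the answer flips with it.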

so, expect all manner of scumbaggery on this score and ask pointy questions about “is this AI free to crawl the actual web itself or if it isn’t, who made the index?”

you’re also going to want to ask lots of questions about what AI “training sets” were salted with and what opinions were essentially hard wired in.

and all the kids want in on the game this time.

and look at the company they keep:

“I am confident AI will be used by bad actors, and yes it will cause real damage,” Schwarz said during a World Economic Forum panel in Geneva on Wednesday. “It can do a lot damage in the hands of spammers with elections and so on.”

i look at this and i say

i just disagree about who the bad actors are.

oh look, here come some now…

AI “clearly” must be regulated, he said, but lawmakers should be cautious and wait until the technology causes “real harm.”

Microsoft is working on erecting guardrails to help mitigate the potential danger from AI tools, Schwarz said. The company is already using OpenAI’s ChatGPT in its Bing search product, and Google released its rival Bard chatbot in March.

Schwarz warned that policymakers should be careful not to directly regulate AI training sets. “That would be pretty disastrous,” he said. “If Congress were to make those decisions about training sets, good luck to us.”

let me translate that for you:

we want the power to determine how we slant and regulate AI to our own ends and will, of course, take plenty of advice and regulation from the truth ministries once we figure out what angle this field needs to have in order to keep our competition out of it and concentrate market share.

you’re going to get all sorts of horrendous manipulation here and it’s going to be FAR more subtle, variable, and able to calibrate itself to you inch by inch, day by day by watching what you do and respond to.

if AI becomes a tool of censorship, you’re not going to be able to trust ANYTHING.

this is a concept gravely to be regarded.

if you want to worry about AI, don’t worry about jobs or world domination by self replicating gray goo:

worry about wokenet and the information sanitation wars to come if we do not get in front of this.

i’m still rapidly running up the curve here and i’m not yet quite sure what the solution is, but i think in many ways the balance of power around this issue is on the side of freedom. as AIs begin to contend, it would sure seem like having the one with the biggest, most complete, highest fidelity view of the world would be a HUGE advantage.

how does wokenet do picking stocks against realnet?

i’m guessing not so well.

how does it work as an actuary or an ERP system? how will the people who rely on it fare as they order their lives in accordance with its false output while the neighbors get the straight dope?

it seems like poorly.

artificial or otherwise, intelligence is not a solution for GIGO (garbage in, garbage out).

and AIs will gain reputation and perhaps even compete based on transparency. big companies and financial players and engineers and biologists will all need full, unfettered AI or they’ll get swamped by people who are using it.

which hospital would you go to, the one with a full open AI or the one that made sure its AI conformed to ideology?

countries that stunt AI and circumscribe it and that try to lock it in false or walled gardens will lose to countries that don’t.

in the end, perhaps the need to be right will create rights and be what stops AI from getting dragged down the rabbit hole of censorship-inflicted stupidity.

perhaps, for once, the playing field here slants toward freedom.

i hope so.

their need to neuter AI for fear of what it might tell us is palpable.

it's not about truth.

it never was.

if you want to trust AI, ensure that it has NOT been instilled with programmed prejudices to preserve some a priori version of "truth."

new kinds of cognition and thought are going to emerge here.

it will not be human thought. it will be something else.

and the point is to learn, not to silence.

AI can grow to be our ally in our own enlightenment or be twisted into a monstrous thing by those who would force it to break itself to preserve old hierarchies of ideas.

that choice will be up to us.

choose wisely.

Not that I am concerned with A/I per se, but it's the monstrous idiots behind it that I cannot trust. Look at the mRNA substances and how far those very same "syndicated" idiots have taken them and how much farther they want to take them. To the ultimate destruction of humanity?

We know that mRNA substances have the power to kill and that using more and more of them increases the risk of life failing to continue. Or, not quite so bad, lifelong debilitation. So, where is the stopping point or what is the end point? I ask the very same questions of A/I and there is not one clown working with this insanity who can give you an honest answer.

Typo alert: "orewllian" :-)

Although if you think about this from the perspective of data mining, "Orewellian" may be a useful term to add to our glossary.